This Week in AI News

This Week in AI | 9/28/24

The AI revolution is in overdrive, and the tech world is buzzing with daily AI announcements. With major releases from OpenAI, Meta (Llama), & Google, we're witnessing a significant shift in AI capabilities.

Here are this week's key highlights:

Top AI News

1. Big News from OpenAI

Advanced Voice Mode is rolling out to all ChatGPT Plus & Team users in the app this week, with Custom Instructions, Memory, 5 new voices, & improved accents.

OpenAI's announcement of Advanced Voice Mode (AVM) for ChatGPT marks a significant leap forward in AI-powered conversational interfaces. This update, rolling out to all ChatGPT Plus and Team users in the app, brings several key enhancements that are poised to revolutionize user interactions with the chatbot. The integration of Custom Instructions and Memory features into AVM is particularly noteworthy, allowing users to personalize their interactions and enabling ChatGPT to recall previous conversations, potentially leading to more coherent and continuous dialogues over time.

The introduction of five new voices (Arbor, Maple, Sol, Spruce, and Vale) brings the total number of available voices to nine, giving users more choices to suit their preferences or specific use cases. AVM also offers improved accents and supports more than 50 languages, broadening ChatGPT's accessibility and making it a more globally inclusive tool. These enhancements position ChatGPT as a versatile assistant for diverse user bases worldwide.

The rollout of AVM has far-reaching implications across various sectors. In education, it can facilitate language learning and provide interactive tutoring experiences. For businesses, AVM can be used for simulating negotiations and enhancing communication skills. In healthcare, the technology could be applied in creating medical assistants for patient care and treatment tracking. The entertainment and creative industries could leverage AVM's ability to perform monologues and adapt to different speaking styles for scriptwriting and voice acting applications.

Despite its advancements, AVM comes with certain limitations. It's not yet available in several regions, including the EU and UK, and there are daily usage limits. The feature is also not optimized for use in vehicles. These constraints suggest that while powerful, the technology is still evolving and may require further refinement for broader applications.

OpenAI's Advanced Voice Mode for ChatGPT represents a significant step forward in making AI interactions more natural, personalized, and versatile. By combining improved voice capabilities with custom instructions and memory features, OpenAI is pushing the boundaries of what's possible in AI-human interactions. As this technology continues to evolve, we can expect to see even more innovative applications and use cases emerge across various industries and domains, further solidifying ChatGPT's position at the forefront of conversational AI technology.

2. Meta Releases Llama 3.2

New lightweight 1B & 3B models bring AI to edge devices, supporting on-device tasks like summarization. The 11B & 90B models can process both text & images, rivaling top competitors. Plus, Llama Guard & Llama Stack simplify & secure building AI apps.

Meta's release of Llama 3.2 marks a significant leap forward in the field of artificial intelligence, introducing a range of models that cater to diverse computing environments and use cases. The new lineup includes lightweight models (1B and 3B parameters) designed specifically for edge and mobile devices, enabling on-device AI capabilities with minimal latency and resource overhead. These smaller models can support advanced tasks such as summarization, instruction following, and rewriting, all while maintaining a context window of up to 128K tokens. This development is particularly noteworthy as it brings powerful AI capabilities to resource-constrained environments, potentially revolutionizing areas like mobile computing and IoT.

At the other end of the spectrum, Llama 3.2 introduces larger multimodal models (11B and 90B parameters) capable of processing both text and high-resolution images. This multimodal capability opens up new possibilities for applications such as image-based search, content generation, and interactive educational tools. Meta reports that the 90B model outperforms industry leaders like Claude 3 Haiku and GPT-4o mini on several benchmarks, including MMLU. This competitive edge positions Llama 3.2 as a formidable player in the AI landscape, challenging established models from OpenAI and Anthropic.

Meta has also prioritized safety and accessibility in the development of Llama 3.2. The introduction of Llama Guard and Llama Stack simplifies the process of building secure AI applications. These features, along with optimized versions for specific hardware platforms like Qualcomm, MediaTek, and Arm-based processors, demonstrate Meta's commitment to responsible AI development and widespread adoption. By making these models open-source and accessible through various cloud platforms like Google Cloud's Vertex AI, Amazon Bedrock, and others, Meta is fostering innovation and enabling developers and enterprises to leverage state-of-the-art AI capabilities in their applications. This approach not only democratizes access to advanced AI but also encourages collaborative improvement and adaptation of the models across diverse use cases and industries.

3. Google Supercharges Gemini

New production-ready Gemini 1.5 Pro & Flash models with improved long-context understanding

Google's latest update to its Gemini AI models represents a significant leap forward in the field of artificial intelligence, demonstrating the company's commitment to advancing and democratizing AI technology. The release of production-ready versions of Gemini 1.5 Pro and Flash marks a notable improvement in long-context understanding, with both models able to process at least 1 million tokens in a single prompt. This allows for the analysis of vast amounts of data, including hours of video or audio, or thousands of lines of code, in one request, greatly expanding the models' potential applications across various industries.
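
To get a feel for what a 1-million-token window can hold, here is a rough Python sketch that estimates whether a set of documents fits in a given context window. It assumes about 4 characters per token, a common rule of thumb for English text; Gemini's actual tokenizer will give different counts, so treat the results as ballpark figures. The function names and the heuristic are illustrative assumptions, not part of any Google API.

```python
import math

# ASSUMPTION: roughly 4 characters per token for English text and code.
# This is a rule of thumb, not Gemini's (or any model's) real tokenizer.
CHARS_PER_TOKEN = 4.0


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Approximate the token count of `text` from its character length."""
    if chars_per_token <= 0:
        raise ValueError("chars_per_token must be positive")
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    texts: list[str], context_window: int, reserve_for_output: int = 0
) -> tuple[bool, int]:
    """Return (fits, estimated_prompt_tokens) for a list of input documents.

    `reserve_for_output` keeps room in the window for the model's reply.
    """
    prompt_tokens = sum(estimate_tokens(t) for t in texts)
    return prompt_tokens + reserve_for_output <= context_window, prompt_tokens


if __name__ == "__main__":
    # Hypothetical codebase: 20,000 lines of ~40 characters each.
    codebase = ["x" * 40] * 20_000
    for window in (128_000, 1_000_000):
        fits, tokens = fits_in_context(codebase, window, reserve_for_output=8_192)
        print(f"{window:>9,} tokens: ~{tokens:,} prompt tokens, fits={fits}")
```

Under this heuristic the hypothetical codebase comes to roughly 200,000 tokens: too large for a 128K window like the one on Llama 3.2's lightweight models, but comfortably inside a 1-million-token window.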

The updated Gemini 1.5 Flash is particularly noteworthy, as it offers a lightweight alternative optimized for speed and efficiency while still maintaining impressive capabilities. This model is designed to handle high-volume, high-frequency tasks at scale, making it ideal for applications that require quick response times and cost-effective processing. Despite its smaller size compared to 1.5 Pro, Flash demonstrates strong performance in multimodal reasoning and keeps the long context window, making it a versatile option for developers and enterprises alike.

Google's decision to reduce the pricing for Gemini 1.5 Pro by over 50% is a game-changing move that significantly lowers the barrier to entry for advanced AI capabilities. This price reduction, coupled with more than doubled rate limits, enables developers and businesses to leverage Gemini's powerful features more extensively and cost-effectively. The pricing strategy reflects Google's aim to make state-of-the-art AI technology more accessible to a broader range of users and applications.

Furthermore, the adjustment to generate 5-20% shorter default responses addresses user feedback and improves the models' usability. This change is likely to enhance the efficiency of interactions with Gemini, making it easier for developers to integrate the AI into various applications without overwhelming users with excessive information. The combination of these updates - improved models, reduced pricing, increased rate limits, and optimized response lengths - positions Google's Gemini as an increasingly attractive option in the competitive landscape of AI models, potentially accelerating the adoption and integration of AI across diverse sectors.
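
To make the combined effect of the price cut and shorter default responses concrete, the sketch below estimates per-request cost before and after. All prices and request sizes are hypothetical placeholders, not Google's published rates; the "over 50%" cut is modeled as exactly 50% on both input and output tokens, and shorter responses are modeled by scaling output tokens down by 5% and 20%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD. Values used below are HYPOTHETICAL."""

    input_per_m: float
    output_per_m: float


def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request at the given per-million-token prices."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


def discounted(p: Pricing, cut: float) -> Pricing:
    """Apply a fractional price cut (e.g. 0.5 for 50%) to both rates."""
    if not 0 <= cut < 1:
        raise ValueError("cut must be in [0, 1)")
    return Pricing(p.input_per_m * (1 - cut), p.output_per_m * (1 - cut))


if __name__ == "__main__":
    old = Pricing(input_per_m=4.00, output_per_m=12.00)  # hypothetical "before" prices
    new = discounted(old, cut=0.50)                      # ">50%" cut modeled as 50%
    inp, out = 10_000, 1_000                             # hypothetical request size
    before = request_cost(old, inp, out)
    for shrink in (0.05, 0.20):                          # 5% and 20% shorter replies
        after = request_cost(new, inp, round(out * (1 - shrink)))
        print(f"{shrink:.0%} shorter output: ${before:.4f} -> ${after:.4f} "
              f"({1 - after / before:.1%} saving)")
```

Because the shorter responses compound with the lower per-token price, the saving per request ends up slightly larger than the headline price cut alone.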

4. OpenAI Leadership Shakeup

CTO Mira Murati announced her departure after 6.5 years. Chief Research Officer Bob McGrew and VP of Research Barret Zoph are also leaving. This follows earlier departures of other execs, leaving CEO Sam Altman as one of the few remaining members of OpenAI's original leadership.

The recent leadership shakeup at OpenAI marks a significant turning point for the pioneering artificial intelligence company, with the departure of several key executives signaling a period of transition and potential realignment. The announcement that Chief Technology Officer Mira Murati, Chief Research Officer Bob McGrew, and Vice President of Research Barret Zoph are leaving the company simultaneously is particularly noteworthy, given their critical roles in OpenAI's technological development and research initiatives. Murati's departure is especially impactful, considering her six-and-a-half-year tenure and her brief stint as interim CEO during the tumultuous period of Sam Altman's temporary ousting in November 2023.

This latest wave of departures follows a series of high-profile exits from OpenAI over the past year, including co-founders and other senior executives. The exodus has left CEO Sam Altman as one of the few remaining original leaders, potentially concentrating power and decision-making authority in his hands. This concentration of leadership could lead to a more streamlined decision-making process, but it also raises questions about the diversity of perspectives at the top of the organization and the potential impact on OpenAI's innovative culture.

The timing and scale of these departures suggest underlying tensions or disagreements within the company, possibly related to its strategic direction, ethical considerations in AI development, or the balance between commercial interests and the organization's original mission. It's worth noting that some former executives have cited concerns about OpenAI's priorities and approach to AI safety as reasons for their departures. This leadership vacuum could pose challenges for OpenAI in maintaining its competitive edge and continuing its rapid pace of innovation in the highly dynamic field of artificial intelligence.

As OpenAI navigates this transition, the company faces the dual challenges of maintaining its technological leadership in the AI industry while also addressing concerns about governance, ethics, and the responsible development of AI technologies. The success of this transition will likely depend on Altman's ability to rebuild the leadership team, align the organization around a clear vision, and address any underlying issues that may have contributed to the wave of departures. How OpenAI manages this period of change could have significant implications not only for the company's future but also for the broader landscape of AI development and its impact on society.

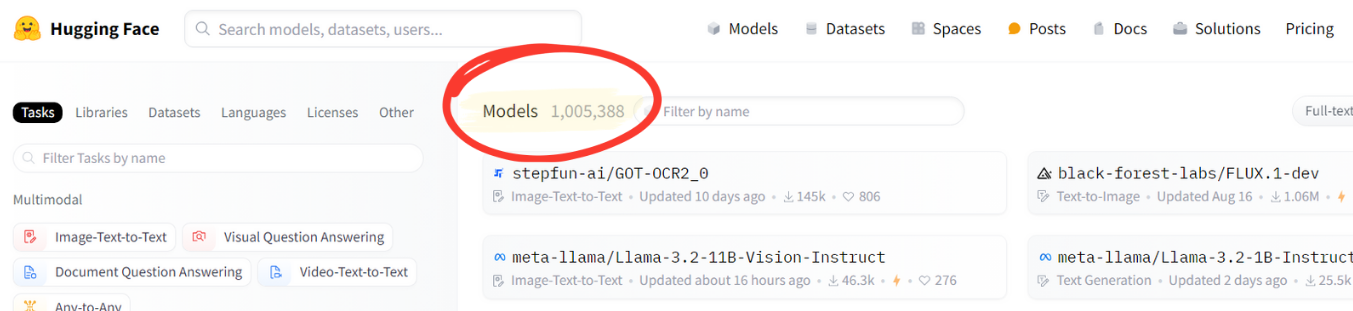

5. Hugging Face Hits a Milestone

Hugging Face has officially crossed the remarkable milestone of 1 million AI models, highlighting the rapid growth of open-source AI. A new repository (model, dataset, or Space) is now created on the platform roughly every 10 seconds.

Hugging Face's achievement of hosting over 1 million AI models marks a significant milestone in the field of artificial intelligence and machine learning. This remarkable feat underscores the platform's pivotal role in democratizing AI technology and fostering a vibrant, collaborative community of developers and researchers. Since its transformation from a chatbot app to an open-source AI model hub in 2020, Hugging Face has become the go-to platform for hosting, sharing, and discovering AI models across various domains and applications.

The exponential growth in the number of models on Hugging Face reflects the rapid pace of innovation in the AI industry. With a new repository created roughly every 10 seconds, the platform is witnessing an unprecedented surge in AI research and development activity. This growth is not just about quantity; it represents a shift towards more specialized, task-specific models, as noted by Hugging Face CEO Clément Delangue. The prevalence of smaller, customized models optimized for specific uses, domains, and languages indicates a trend towards more efficient and targeted AI solutions.
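
For a sense of scale, the 10-second figure works out to the following daily and yearly totals, assuming (unrealistically) a perfectly constant upload rate around the clock:

```latex
\frac{86\,400\ \text{s/day}}{10\ \text{s per upload}} = 8\,640\ \text{uploads/day},
\qquad
8\,640 \times 365 = 3\,153\,600\ \text{uploads/year}
```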

The diversity of models available on Hugging Face is particularly noteworthy. From widely used models from Meta (Llama), Microsoft, and Mistral AI to countless specialized models, the platform caters to a broad spectrum of AI applications. This variety enables developers and researchers to explore, experiment with, and build upon existing models, accelerating the pace of innovation in the field. The platform's open-source nature and accessibility have created a thriving ecosystem where cutting-edge machine learning techniques are combined with real-world applications, fostering a community-driven approach to AI development.

As Hugging Face continues to grow, it is poised to play an increasingly crucial role in shaping the future of AI. The platform's success in reaching this milestone not only demonstrates the rapid advancement of AI technology but also highlights the power of open collaboration in driving innovation. With the AI community's continued engagement and contribution, Hugging Face is likely to remain at the forefront of AI development, facilitating groundbreaking research and practical applications across various industries and domains.

6. Google's NotebookLM Gets a Major Upgrade

NotebookLM now has exciting new features:

YouTube Integration - Add YouTube video URLs for instant summaries

Audio File Support - Add .mp3 & .wav files for quick insights

Expanded Sources - Now supports PDFs, Google Docs, Slides, & webpages

Google's NotebookLM has taken a significant leap forward with its latest update, introducing features that expand its capabilities and enhance its utility as an AI-powered research and note-taking tool. The addition of YouTube integration stands out as a game-changing feature, allowing users to simply paste in video URLs and receive instant summaries of the content. This not only saves time but also lets users quickly grasp key concepts from lengthy videos, making it an invaluable tool for students, researchers, and professionals alike. The ability to generate summaries from video transcripts opens up new possibilities for content analysis and information extraction from the vast repository of knowledge available on YouTube.

Complementing the YouTube integration is the new support for audio files, including popular formats like MP3 and WAV. This feature extends NotebookLM's reach into the realm of audio content, enabling users to gain quick insights from podcasts, lectures, and other audio recordings. By transcribing and analyzing audio content, NotebookLM can provide summaries and key takeaways, making it easier for users to process and retain information from auditory sources. This addition is particularly beneficial for auditory learners and professionals who frequently work with audio content.

The expansion of supported source types to include PDFs, Google Docs, Slides, and webpages further solidifies NotebookLM's position as a comprehensive research and note-taking platform. This broad range of supported formats allows users to consolidate information from various sources within a single tool, streamlining the research process and enabling more efficient knowledge management. The ability to seamlessly integrate content from different file types and platforms makes NotebookLM a versatile solution for diverse use cases, from academic research to business analysis and content creation. As Google continues to enhance NotebookLM's capabilities, it is positioning the tool as an indispensable asset for anyone looking to leverage AI in their information processing and knowledge work.

7. OpenAI Extends Free Fine-Tuning & Boosts Rate Limits

OpenAI's recent announcement of extended free fine-tuning and increased rate limits represents a significant boost for developers and researchers working with its AI models. By extending free fine-tuning until October 31st, OpenAI is giving users more time to customize models to their specific needs at no additional cost. This move is particularly beneficial for those exploring the capabilities of AI in various domains, as it allows for more extensive experimentation and development without financial constraints.

The increase in daily free training tokens is a substantial upgrade, with GPT-4o now offering 1 million tokens per day and GPT-4o mini providing 2 million tokens per day. This expansion in free resources enables more comprehensive model training, potentially leading to improved performance and accuracy in specialized tasks. The raised limits for Tier 5 users, now allowing up to 10,000 requests per minute for select models, cater to high-volume users and enterprise-level applications, facilitating more efficient and scalable AI implementations.
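
To see how far the free allowance goes, here is a small Python sketch. It assumes that a job's training-token usage equals the tokens in the training file multiplied by the number of epochs, which is how OpenAI describes fine-tuning billing; check the current documentation before relying on it. It also treats the daily allowance as a simple per-day cap, which is a simplification. The dataset sizes are hypothetical, and the dictionary keys are informal labels rather than exact model snapshot names.

```python
# Free daily training-token allowances as reported in this article.
# Keys are informal labels, not exact API model snapshot IDs.
FREE_DAILY_TRAINING_TOKENS = {
    "gpt-4o": 1_000_000,
    "gpt-4o-mini": 2_000_000,
}


def training_tokens(file_tokens: int, epochs: int) -> int:
    """Tokens consumed by a job, ASSUMING usage = file tokens x epochs."""
    if file_tokens < 0 or epochs < 1:
        raise ValueError("file_tokens must be >= 0 and epochs >= 1")
    return file_tokens * epochs


def max_examples_per_day(model: str, tokens_per_example: int, epochs: int) -> int:
    """Largest number of equally sized examples that fits the free daily allowance."""
    if tokens_per_example < 1 or epochs < 1:
        raise ValueError("tokens_per_example and epochs must be >= 1")
    budget = FREE_DAILY_TRAINING_TOKENS[model]
    return budget // (tokens_per_example * epochs)


if __name__ == "__main__":
    # Hypothetical dataset: 500 examples of ~600 tokens, trained for 3 epochs.
    used = training_tokens(file_tokens=500 * 600, epochs=3)
    for model, budget in FREE_DAILY_TRAINING_TOKENS.items():
        print(f"{model}: job uses {used:,} of {budget:,} free tokens, "
              f"fits={used <= budget}, max examples at this size: "
              f"{max_examples_per_day(model, 600, 3):,}")
```

Because usage scales with the epoch count, cutting epochs from 3 to 1 roughly triples the number of examples that fit in the same free budget.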

Furthermore, the across-the-board boost in rate limits, reaching up to 50 times the previous limits for various models, signifies OpenAI's commitment to supporting more demanding and complex AI applications. This increase in throughput capacity allows for more responsive and real-time AI-powered services, opening up new possibilities for applications that require high-frequency interactions or large-scale data processing. Overall, these enhancements demonstrate OpenAI's efforts to make their AI technologies more accessible, flexible, and powerful for a wide range of users and use cases, potentially accelerating innovation and adoption of AI across various industries.
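
Higher limits are enforced on OpenAI's side, but client code still has to pace its requests to stay under its tier's requests-per-minute (RPM) cap. Below is a minimal single-threaded sketch of a client-side sliding-window limiter, assuming a plain 60-second window; OpenAI also enforces tokens-per-minute limits and may measure windows differently, so production code should still handle rate-limit errors with retries. The class name is hypothetical, and the clock and sleep functions are injectable so the demo can run on a simulated clock.

```python
import time
from collections import deque
from typing import Callable


class RequestsPerMinuteLimiter:
    """Sliding-window limiter: at most `rpm` requests in any 60-second window.

    Not thread-safe; intended for a single client loop.
    """

    def __init__(
        self,
        rpm: int,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if rpm < 1:
            raise ValueError("rpm must be >= 1")
        self.rpm = rpm
        self.window = 60.0
        self._clock = clock
        self._sleep = sleep
        self._sent: deque = deque()  # timestamps of requests inside the window

    def _evict(self, now: float) -> None:
        # Drop timestamps that have aged out of the 60-second window.
        while self._sent and now - self._sent[0] >= self.window:
            self._sent.popleft()

    def acquire(self) -> float:
        """Block until a request may be sent; return the seconds spent waiting."""
        waited = 0.0
        while True:
            now = self._clock()
            self._evict(now)
            if len(self._sent) < self.rpm:
                self._sent.append(now)
                return waited
            # Sleep until the oldest request in the window expires.
            delay = self.window - (now - self._sent[0])
            self._sleep(delay)
            waited += delay


if __name__ == "__main__":
    # Simulated clock so the demo finishes instantly instead of sleeping.
    fake_now = [0.0]
    limiter = RequestsPerMinuteLimiter(
        rpm=3,
        clock=lambda: fake_now[0],
        sleep=lambda d: fake_now.__setitem__(0, fake_now[0] + d),
    )
    for i in range(5):
        waited = limiter.acquire()
        print(f"request {i + 1}: waited {waited:.1f}s (t={fake_now[0]:.1f}s)")
```

With the default real clock, a Tier 5 client could use `RequestsPerMinuteLimiter(rpm=10_000)` and call `acquire()` before each API request. A sliding window avoids the burst at the boundary that a fixed per-minute counter allows.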

8. Introducing Orion AR Glasses at Meta Connect 2024

These sleek glasses promise to blend digital experiences seamlessly with the real world, enhancing everything from gaming to productivity.

Meta's unveiling of its Orion AR glasses prototype at Connect 2024 marks a significant milestone in the evolution of augmented reality technology, representing a leap forward in the company's vision for the future of computing and human interaction. These sleek glasses are designed to seamlessly integrate digital experiences with the physical world, offering a range of applications from immersive gaming to enhanced productivity tools. The promise of blending virtual elements with reality in a lightweight, wearable form factor has been a long-standing goal in the tech industry, and Meta's Orion appears to be a substantial step towards realizing this ambition.

The emphasis on immersive AR experiences suggests that Meta has made significant advancements in display technology, likely incorporating high-resolution micro-displays and advanced optics to create convincing digital overlays. This could transform how users interact with information, entertainment, and their surroundings, offering contextual data and interactive elements that enhance daily activities. The lightweight design is crucial to Orion's potential success, as comfort and wearability are essential for any device intended for prolonged use. Meta's ability to pack advanced technology into a form factor that resembles traditional eyewear could be a game-changer in terms of user adoption and social acceptability.

Advanced connectivity features hint at the glasses' ability to seamlessly integrate with other devices and networks, possibly leveraging 5G or other high-speed wireless technologies. This connectivity is likely crucial for enabling real-time data processing, cloud-based applications, and smooth interactions with the digital world. However, while the announcement of Orion is exciting, it's important to consider potential challenges such as battery life, privacy concerns, and the need for compelling software ecosystems to drive adoption. As Meta continues to invest heavily in AR and VR technologies, Orion represents a significant step towards its vision of a more immersive and connected future, potentially reshaping how we interact with technology and each other in the coming years.

Liked this article? Find more AI and ML news here: ML AI News.

by ML & AI News

Machine Learning Artificial Intelligence News

https://machinelearningartificialintelligence.com