Edge ML Overview

Edge machine learning, or edge ML, refers to running machine learning (ML) models on an edge device to collect, process, and recognize patterns within collections of raw data. Throughout this article, we'll take a deeper dive into edge ML and its capabilities.

What is machine learning at the edge (Edge ML)?

To best explain edge ML, let’s start by breaking down the two components that make it up: machine learning and edge computing.

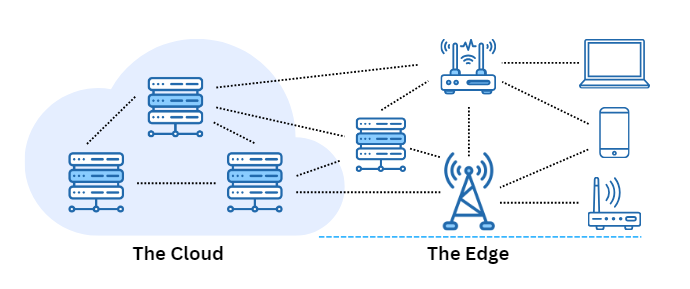

Machine learning is a subset of artificial intelligence (AI) in which systems learn patterns from data rather than following explicitly programmed rules, which lets them perform perceptive tasks, such as recognizing images or sounds, in a fraction of the time it would take a human. Edge computing refers to bringing computing services physically closer to either the user or the source of the data. Processing and storing data on remote servers, especially internet servers, is known as "cloud computing"; the edge includes all computing devices that are not part of the cloud. These edge devices allow raw data to be collected and processed in real time, resulting in faster, more reliable analysis. Machine learning at the edge brings the capability of running machine learning models locally on edge devices, such as Internet of Things (IoT) devices.

ML at the edge vs. IoT

There is a common confusion between machine learning at the edge and the Internet of Things (IoT), so it’s important to clarify how edge ML differs from IoT and how the two can come together to provide a powerful solution in certain cases. An edge solution that uses ML at the edge has two main components: an edge application and an ML model (invoked by the application) running on the edge device. Edge ML is about controlling the lifecycle of one or more ML models deployed to a fleet of edge devices.

Edge computing

Edge computing includes personal computers and smartphones in addition to embedded systems (such as those that make up the Internet of Things). To make all of these devices smarter and less reliant on backend servers, we turn to edge machine learning. Depending on how far a device is from the cloud or a big data center (the base), three main characteristics need to be considered to maximize the performance and longevity of the system: computing and storage capacity, connectivity, and power consumption. Edge devices fall into three groups that combine different specifications of these characteristics, depending on how far they are from the base.
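The three characteristics can be made concrete as a simple deployment check. The sketch below is a minimal illustration under stated assumptions, not an established tool: `EdgeDeviceProfile`, `ModelRequirements`, `blocking_issues`, and `update_strategy` are hypothetical names, and every device and model figure is a made-up example.

```python
from dataclasses import dataclass


@dataclass
class EdgeDeviceProfile:
    """Hypothetical description of an edge device along the three characteristics."""
    name: str
    storage_mb: float        # computing and storage capacity: persistent storage
    ram_mb: float            # computing and storage capacity: working memory
    always_connected: bool   # connectivity to the base
    power_budget_mw: float   # power the device can spend on inference


@dataclass
class ModelRequirements:
    """Hypothetical footprint of a model build for a given target."""
    size_mb: float
    peak_ram_mb: float
    inference_power_mw: float


def blocking_issues(device: EdgeDeviceProfile, model: ModelRequirements) -> list[str]:
    """Return the reasons the model cannot run on the device; empty means it fits."""
    issues = []
    if model.size_mb > device.storage_mb:
        issues.append("model does not fit in storage")
    if model.peak_ram_mb > device.ram_mb:
        issues.append("not enough RAM for inference")
    if model.inference_power_mw > device.power_budget_mw:
        issues.append("inference exceeds the power budget")
    return issues


def update_strategy(device: EdgeDeviceProfile) -> str:
    """Connectivity does not block deployment, but it shapes how updates arrive."""
    if device.always_connected:
        return "push updates on demand"
    return "queue updates for the next connection window"


if __name__ == "__main__":
    # Illustrative numbers only: a battery-powered sensor node and a mains-powered gateway.
    sensor_node = EdgeDeviceProfile("sensor-node", 1.0, 0.256, False, 50.0)
    gateway = EdgeDeviceProfile("gateway", 8000.0, 2000.0, True, 10000.0)
    keyword_model = ModelRequirements(0.2, 0.1, 20.0)
    detector_model = ModelRequirements(25.0, 300.0, 5000.0)

    for device in (sensor_node, gateway):
        for label, model in (("keyword", keyword_model), ("detector", detector_model)):
            print(device.name, label, blocking_issues(device, model) or "fits")
        print(device.name, update_strategy(device))
```

Keeping connectivity out of `blocking_issues` reflects the idea that a weakly connected device can still host a model; it only changes how the model's lifecycle is managed.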

The edge machine learning mechanism is not responsible for the application lifecycle. A different approach should be adopted for that purpose. Decoupling the ML model lifecycle and application lifecycle gives you the freedom and flexibility to keep evolving them at different paces.
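One way to picture this decoupling on a single device is an application that never holds a model directly but asks a swappable "slot" for predictions, so a model update does not require a new application release. This is a minimal sketch under that assumption: `ModelArtifact`, `ModelSlot`, and `EdgeApplication` are hypothetical names, and the linear scorer stands in for a real model runtime.

```python
import threading
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ModelArtifact:
    """A deployable model version; a linear scorer stands in for a real model."""
    version: str
    weights: tuple[float, ...]
    bias: float

    def predict(self, features: Sequence[float]) -> float:
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


class ModelSlot:
    """Holds the active model; the application only ever talks to the slot."""

    def __init__(self, initial: ModelArtifact) -> None:
        self._model = initial
        self._lock = threading.Lock()

    def swap(self, new_model: ModelArtifact) -> None:
        # Called by a separate model-update process, on the model's own release cadence.
        with self._lock:
            self._model = new_model

    def predict(self, features: Sequence[float]) -> tuple[str, float]:
        with self._lock:
            model = self._model  # take a consistent reference, then score outside the lock
        return model.version, model.predict(features)


class EdgeApplication:
    """Application logic, versioned and released independently of the model."""

    APP_VERSION = "1.4.0"

    def __init__(self, slot: ModelSlot) -> None:
        self.slot = slot

    def handle_reading(self, features: Sequence[float]) -> dict:
        model_version, score = self.slot.predict(features)
        action = "alert" if score > 0.5 else "ok"
        return {"app": self.APP_VERSION, "model": model_version, "action": action}


if __name__ == "__main__":
    slot = ModelSlot(ModelArtifact("v1", (0.2, 0.1), 0.0))
    app = EdgeApplication(slot)
    print(app.handle_reading([1.0, 1.0]))  # v1 scores ~0.3 -> "ok"
    slot.swap(ModelArtifact("v2", (0.4, 0.3), 0.0))
    print(app.handle_reading([1.0, 1.0]))  # same app version, v2 scores ~0.7 -> "alert"
```

The application version stays at `1.4.0` while the model moves from `v1` to `v2`, which is the kind of independent evolution the paragraph above describes.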

But how does IoT relate to edge ML?

IoT refers to physical objects embedded with technologies such as sensors, processing ability, and software. These objects are connected to other devices and systems over the internet or other communication networks in order to exchange data. The concept was originally conceived for simple devices that just collect data at the edge, perform simple local processing, and send the result to a more powerful computing unit that runs analytics processes to help people and companies make decisions.

The Internet of Things

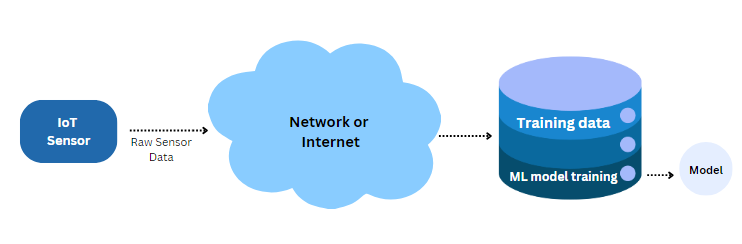

The Internet of Things (IoT) is the collection of sensors, hardware devices, and software that exchange information with other devices and computers across communication networks. We often think of IoT as a series of sensors with Wi-Fi or Bluetooth connectivity that relay information about the environment to us. For many years, IoT was known as “machine to machine” (M2M). It involved connecting sensors and automating control processes between various computing devices, and it saw wide adoption in industrial machines and processes. Machine learning offers the ability to create further advancements in automation by introducing models that can make predictions or decisions without human intervention. Due to the complex nature of many machine learning algorithms, the traditional integration of IoT and ML involves sending raw sensor data to a central server, which performs the necessary inference calculations to generate a prediction. For low volumes of raw data and complex models, this configuration may be acceptable. However, as the volume of raw data grows, transmitting all of it across the network becomes a bottleneck.

To counter the need to transmit large amounts of raw data across networks, data storage and some computations can be accomplished on devices closer to the user or sensor, known as the “edge.” Edge computing stands in contrast to cloud computing, where remote data and services are available on demand to users.
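A back-of-the-envelope calculation shows why moving inference to the edge reduces network traffic. The sensor and model figures below are hypothetical: a 3-axis accelerometer sampled at 100 Hz with 4-byte (float32) values, compared with an on-device classifier that sends one 1-byte class label per second.

```python
SECONDS_PER_DAY = 86_400


def raw_bytes_per_day(sample_rate_hz: float, channels: int, bytes_per_value: int) -> int:
    """Bytes sent per day if every raw sample is streamed to a server for inference."""
    return int(sample_rate_hz * channels * bytes_per_value * SECONDS_PER_DAY)


def edge_bytes_per_day(inferences_per_s: float, bytes_per_result: int) -> int:
    """Bytes sent per day if inference runs on the device and only results are sent."""
    return int(inferences_per_s * bytes_per_result * SECONDS_PER_DAY)


if __name__ == "__main__":
    # Hypothetical 3-axis accelerometer: 100 Hz, 3 channels, float32 (4-byte) values.
    raw = raw_bytes_per_day(100, 3, 4)
    # Hypothetical on-device classifier: one 1-byte class label per second.
    edge = edge_bytes_per_day(1, 1)
    print(raw, edge, raw // edge)  # 103680000 86400 1200
```

Under these assumptions the edge configuration sends 1,200 times less data (about 104 MB versus 86 KB per day); the actual ratio depends entirely on the sensor rate and on how often results are reported.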

Edge and embedded machine learning

Advances in hardware and machine learning have paved the way for running deep ML models efficiently on edge devices. Complex tasks, such as object detection, natural language processing, and model training, can still require powerful computers; in these cases, raw data is often collected and sent to a server for processing. However, performing ML on low-power devices offers benefits of its own, such as faster analysis and the ability to work without a network connection.

Edge ML encompasses personal computers, smartphones, and embedded systems. Within this realm, embedded ML, or tinyML, specifically targets running machine learning algorithms on embedded systems, such as microcontrollers and headless single-board computers.

Typically, training a machine learning model demands significantly more computational resources than performing inference. Consequently, we often rely on powerful server farms for training new models. This involves gathering data from the field through sensors, scraping internet images, and other methods to create a dataset, which is then used to train the machine learning model.
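To illustrate the imbalance between training and inference, the sketch below trains a tiny logistic-regression classifier with batch gradient descent on a synthetic dataset and counts the multiply-adds in its dot products. It is a toy stand-in for server-side training and assumes no particular framework; the dataset, hyperparameters, and JSON export format are illustrative choices.

```python
import json
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(data, labels, epochs=200, lr=0.5):
    """Batch gradient descent. Returns (weights, bias, multiply_adds)."""
    n_features = len(data[0])
    w = [0.0] * n_features
    b = 0.0
    multiply_adds = 0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(data, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b  # forward pass
            err = sigmoid(z) - y                           # d(log-loss)/dz
            for j in range(n_features):                    # backward pass
                grad_w[j] += err * x[j]
            grad_b += err
            multiply_adds += 2 * n_features                # forward + backward dot products
        w = [wi - lr * gw / len(data) for wi, gw in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b, multiply_adds


def predict(w, b, x) -> float:
    """Inference: a single dot product, i.e. n_features multiply-adds."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


if __name__ == "__main__":
    # Synthetic "field data": label is 1 when the two readings sum above zero.
    rng = random.Random(0)
    data = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
    labels = [1.0 if x0 + x1 > 0 else 0.0 for x0, x1 in data]

    w, b, cost = train_logistic_regression(data, labels)
    accuracy = sum((predict(w, b, x) > 0.5) == (y == 1.0) for x, y in zip(data, labels)) / len(data)
    print(cost, len(w))  # 160000 2
    print(f"training accuracy: {accuracy:.2f}")
    # The trained parameters are the artifact that would be handed to the edge device.
    artifact = json.dumps({"w": w, "b": b})
```

Even for this two-feature model, training performs 160,000 multiply-adds while a single inference needs 2; real networks widen that gap by many orders of magnitude, which is why training usually stays on servers.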

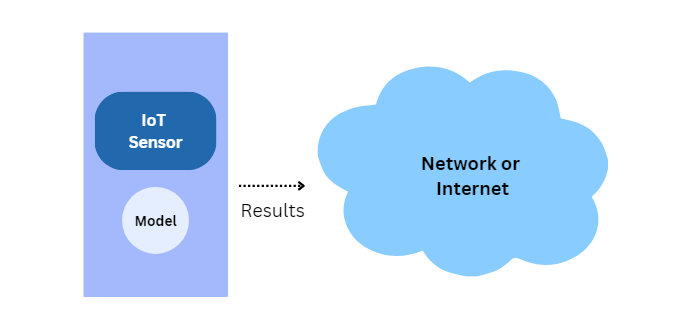

Once a model is trained, it can be deployed to a smart sensor or other edge device. This involves writing firmware or software that uses the model to gather new raw sensor readings, perform inference, and take action based on the results.

These actions might include autonomously driving a vehicle, moving a robotic hand, or notifying a user of faulty equipment. Since inference is performed locally on the edge device, a network connection is not required.
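On the device side, the loop described above (read a sensor, run inference, act) can be sketched without any network calls. The weights below are a hypothetical artifact assumed to come from server-side training; `MODEL_JSON`, `run_device`, and the `act` callback are illustrative names, and the list of readings stands in for a real sensor driver.

```python
import json
import math
from typing import Callable, Iterable

# Hypothetical artifact produced by server-side training and flashed onto the device.
MODEL_JSON = '{"w": [4.0, 4.0], "b": 0.0, "threshold": 0.5}'


def load_model(blob: str):
    model = json.loads(blob)
    return model["w"], model["b"], model["threshold"]


def predict(w, b, x) -> float:
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def run_device(readings: Iterable[tuple[float, float]], act: Callable[[str], None]) -> int:
    """Main loop: read, infer locally, act. Returns the number of actions taken."""
    w, b, threshold = load_model(MODEL_JSON)
    actions = 0
    for x in readings:  # on real hardware this would poll a sensor driver
        if predict(w, b, x) > threshold:
            act("notify: possible equipment fault")  # local action, no network needed
            actions += 1
    return actions


if __name__ == "__main__":
    simulated = [(0.1, 0.2), (-0.5, -0.3), (0.6, 0.1)]
    print(run_device(simulated, print))
```

Passing the action in as a callback keeps the inference loop independent of whatever the device actually does with a result, whether that is driving an actuator or raising a notification.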

How to create an edge strategy

Edge computing is an important part of an open hybrid cloud vision that lets you achieve a consistent application and operations experience across your entire architecture through a common, horizontal platform. While a hybrid cloud strategy allows organizations to run the same workloads in their own data centers and on public cloud infrastructure (like AWS, GCP, or Azure), an edge strategy extends even further, allowing cloud environments to reach locations that are too remote to maintain continuous connectivity with the data center.

Because edge computing sites often have limited or no IT staff, a reliable edge computing solution is one that can be managed using the same tools and processes as the centralized infrastructure, yet can operate independently in a disconnected mode.
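Operating in a disconnected mode often comes down to a store-and-forward pattern: the device keeps working locally, buffers outbound messages while the uplink is down, and flushes them when connectivity returns. The sketch below assumes a hypothetical `send` callback that returns `True` on delivery; it is not tied to any particular edge platform.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer outbound messages while offline; flush them when the uplink returns."""

    def __init__(self, send: Callable[[dict], bool], max_buffer: int = 1000) -> None:
        self._send = send  # hypothetical transport: returns True if the message was delivered
        self._buffer = deque(maxlen=max_buffer)  # when full, the oldest message is dropped

    def publish(self, message: dict) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages in order; stop at the first failure. Returns count sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still disconnected: keep the message and keep operating locally
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    delivered = []
    link = {"up": False}

    def fake_send(message: dict) -> bool:
        if link["up"]:
            delivered.append(message)
            return True
        return False

    queue = StoreAndForward(fake_send)
    queue.publish({"reading": 1})
    queue.publish({"reading": 2})
    print(queue.pending)  # 2: both messages buffered while offline
    link["up"] = True
    print(queue.flush(), delivered)
```

Bounding the buffer with `maxlen` is one design choice among several; it trades the oldest data for a predictable memory footprint, which matters on storage-constrained devices.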

Challenges

It’s common to have ML edge scenarios with hundreds or thousands (maybe even millions) of devices running the same models and edge applications. When you scale your system, it’s important to have a robust solution that can manage the number of devices you need to support. This is a complex task, and it raises many questions about how to deploy, update, and monitor each model across the entire fleet.

The Benefits of AI/ML at the Edge

Artificial intelligence and machine learning have rapidly become critical for businesses as they seek to convert their data into business value. Edge computing solutions focus on accelerating these business initiatives by providing services that automate and simplify the process of developing intelligent applications in the hybrid cloud. As data scientists strive to build their AI/ML models, their efforts are often complicated by a lack of alignment between rapidly evolving tools, which can hurt productivity and collaboration among data scientists, software developers, and IT operations. To sidestep these hurdles, platform services are built to help users design, deploy, and manage their intelligent applications consistently across cloud environments and data centers.

Edge ML is enabling technologies in new areas and allowing for novel solutions to problems. Some of these applications will be visible to consumers (such as keyword spotting on smart speakers), while others will transform our lives in invisible ways (such as smart grids delivering power more efficiently).


Machine Learning Artificial Intelligence News

https://machinelearningartificialintelligence.com
