Exploring Deep Learning with Python: From Basics to Advanced Applications

Deep Learning for Python

When you think about the power of computers, what comes to mind? Perhaps it's their ability to solve complex problems, analyze massive datasets, or even recognize patterns and make predictions. Behind these impressive feats often lies the field of deep learning, a subset of machine learning, which is revolutionizing how we interact with technology. And one of the most accessible and versatile tools for diving into deep learning is Python. We'll explore what deep learning is, how Python is used in this field, and some of its fascinating applications.

What is Deep Learning?

Deep learning is a branch of machine learning loosely inspired by the structure of the human brain. It uses neural networks with many layers (hence "deep") that learn patterns directly from data and use them to make predictions or decisions. Whereas traditional machine learning algorithms may plateau in performance as the dataset grows, deep learning models often continue to improve with more data and larger networks.
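To make the idea of "many layers" concrete, here is a minimal sketch of data flowing through a small stack of fully connected layers, written with only the standard library. The layer sizes, the random weights, and the helper names (`dense`, `init_layer`, `forward`) are illustrative assumptions; a real network would learn its weights during training rather than draw them at random.

```python
import math
import random

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """Apply one fully connected layer: activation(W x + b)."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases (untrained, for illustration only)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
layer_sizes = [4, 8, 8, 1]  # input -> two hidden layers -> output (arbitrary sizes)
layers = [init_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def forward(x):
    """Pass the input through every layer in turn; the last layer uses a sigmoid."""
    for i, (weights, biases) in enumerate(layers):
        is_last = i == len(layers) - 1
        x = dense(x, weights, biases, sigmoid if is_last else relu)
    return x

prediction = forward([0.5, -1.2, 3.0, 0.1])
print(prediction)  # a single value between 0 and 1
```

Each layer transforms the output of the previous one, which is what lets deeper networks build up progressively more abstract features.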

Why Python for Deep Learning?

Python has become the go-to language for deep learning for several reasons:

Ease of Learning and Use - Python's syntax is straightforward, making it an excellent choice for both beginners and experienced developers. Its readability and simplicity allow researchers and developers to focus on solving problems rather than getting bogged down in complex syntax.

Extensive Libraries and Frameworks - Python offers a plethora of libraries such as TensorFlow, Keras, PyTorch, and Theano, which simplify the process of building complex neural networks. These libraries provide high-level abstractions that make it easier to design, train, and evaluate deep learning models.

Community and Support - The Python community is vast and active, providing extensive resources, tutorials, and forums for troubleshooting and collaboration. This ecosystem of support is invaluable for both newcomers and experts in the field.

Integration with Data Science Tools - Python seamlessly integrates with other data science tools and libraries, making it easy to perform data preprocessing, visualization, and analysis alongside deep learning tasks.

Performance - While Python is an interpreted language, which can be slower than compiled languages, many deep learning libraries are optimized for performance. They often use C or C++ backends for computationally intensive operations, providing the ease of Python with the speed of lower-level languages.

Flexibility - Python's flexibility allows for rapid prototyping and experimentation, which is crucial in the fast-paced field of deep learning research.

Getting Started with Python for Deep Learning

To get started with deep learning in Python, you'll need a few key tools and libraries. Here's a quick overview of some essential components:

Setting Up Your Environment

To set up your Python environment for deep learning:

bash: pip install numpy pandas matplotlib seaborn tensorflow scikit-learn jupyter

For PyTorch, you may need to visit their website to get the appropriate installation command for your system.

Basic Deep Learning Workflow

A typical deep learning workflow in Python involves the following steps:

Data Preparation

Model Design

Model Compilation

Training

Evaluation - Assess the model's performance on the test set

Prediction and Deployment
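The data preparation step in the workflow above can be sketched in plain Python as follows. This is only an illustration under stated assumptions: the toy dataset is made up, and the helpers `train_test_split`, `fit_scaler`, and `transform` are hypothetical names written for this example (scikit-learn provides production-ready equivalents). It shows one common convention: split first, then compute scaling statistics from the training set only.

```python
import random
import statistics

def train_test_split(rows, labels, test_fraction=0.2, seed=0):
    """Shuffle the samples and hold out a fraction of them for testing."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(rows) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return ([rows[i] for i in train_idx], [rows[i] for i in test_idx],
            [labels[i] for i in train_idx], [labels[i] for i in test_idx])

def fit_scaler(rows):
    """Compute the per-feature mean and standard deviation of the given rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) or 1.0 for col in columns]  # avoid dividing by zero
    return means, stds

def transform(rows, means, stds):
    """Standardize each feature to zero mean and unit variance."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

# Toy dataset: 10 samples with 2 features each (made-up values)
X = [[float(i), float(i * 10 % 7)] for i in range(10)]
y = [i % 2 for i in range(10)]

X_train, X_test, y_train, y_test = train_test_split(X, y)
means, stds = fit_scaler(X_train)           # statistics come from the training set only
X_train = transform(X_train, means, stds)
X_test = transform(X_test, means, stds)     # the test set reuses the training statistics
print(len(X_train), len(X_test))
```

Reusing the training statistics for the test set keeps information about the test data from leaking into the model.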

Here's a simple example using Keras to create a basic neural network for binary classification:

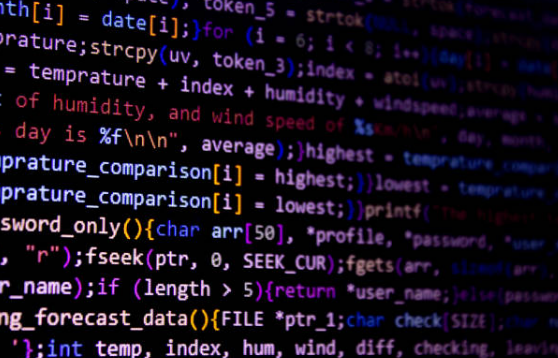

python:
from tensorflow import keras
from tensorflow.keras import layers

# X_train, y_train, X_test, y_test and input_dim (the number of input features)
# are assumed to come from your own data preparation step.

# Define the model: two hidden layers and a sigmoid output for binary classification
model = keras.Sequential([
    layers.Dense(64, activation='relu', input_shape=(input_dim,)),
    layers.Dense(32, activation='relu'),
    layers.Dense(1, activation='sigmoid')
])

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Train the model, holding out 20% of the training data for validation
history = model.fit(X_train, y_train, epochs=100, batch_size=32, validation_split=0.2)

# Evaluate the model on the held-out test set
test_loss, test_acc = model.evaluate(X_test, y_test)
print(f'Test accuracy: {test_acc}')

This example demonstrates the simplicity and power of using Python for deep learning tasks.

Advanced Deep Learning Techniques

As you dive deeper into deep learning, you'll encounter more advanced techniques and architectures, such as:

Convolutional Neural Networks (CNNs) - Ideal for image recognition and computer vision tasks.

CNNs use convolutional layers to automatically and adaptively learn spatial hierarchies of features from input images.

Key components of CNNs include:

Convolutional layers

Pooling layers

Fully connected layers

Example applications:

Image classification

Object detection

Facial recognition
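The three CNN components listed above can be illustrated with a small standard-library sketch. The image, the hand-picked edge kernel, and the function names `conv2d` and `max_pool2d` are assumptions made for this example; in a real CNN the kernel weights are learned, and frameworks such as Keras or PyTorch supply optimized layers.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge kernel (a trained CNN would learn such weights)
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)              # convolutional layer: 4x4 map
pooled = max_pool2d(feature_map)                 # pooling layer: 2x2 map
flattened = [v for row in pooled for v in row]   # input to a fully connected layer
print(feature_map[0])  # [0, 2, 0, 0]: the response peaks where the edge is
print(pooled)          # [[2, 0], [2, 0]]
```

The convolution responds only where the edge sits, and pooling keeps that response while shrinking the map, which is the basic mechanism behind learning spatial features.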

Recurrent Neural Networks (RNNs) - Used for sequential data, like time series and NLP.

RNNs have connections that form directed cycles, allowing them to maintain an internal state or "memory" as they process a sequence one step at a time.

Types of RNNs:

Long Short-Term Memory (LSTM)

Gated Recurrent Unit (GRU)

Example applications:

Language modeling

Machine translation

Speech recognition
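The "memory" of an RNN can be shown with a minimal sketch of a simple (Elman-style) recurrent step. The weights below are hand-picked rather than trained, and `rnn_step` and `run` are hypothetical helper names; LSTM and GRU cells add gating on top of this basic recurrence.

```python
import math

def rnn_step(x_t, h_prev, w_xh, w_hh, b_h):
    """One step of a simple RNN: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for k in range(len(h_prev)):
        z = b_h[k]
        z += sum(w * x for w, x in zip(w_xh[k], x_t))
        z += sum(w * h for w, h in zip(w_hh[k], h_prev))
        h_t.append(math.tanh(z))
    return h_t

# Hand-picked weights for 1 input feature and 2 hidden units (illustrative only)
w_xh = [[0.5], [-0.3]]
w_hh = [[0.8, 0.0], [0.1, 0.6]]
b_h = [0.0, 0.0]

def run(sequence):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = [0.0, 0.0]  # initial hidden state
    for x_t in sequence:
        h = rnn_step(x_t, h, w_xh, w_hh, b_h)
    return h

# Both sequences end with the same inputs, but the final states differ
# because the hidden state still carries a trace of the first input.
h_a = run([[1.0], [0.0], [0.0]])
h_b = run([[0.0], [0.0], [0.0]])
print(h_a)
print(h_b)
```

The same weights are reused at every time step; only the hidden state changes, which is how the network relates earlier inputs to later ones.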

Generative Adversarial Networks (GANs) - For generating new, synthetic data that resembles real data.

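GANs consist of two neural networks (a generator and a discriminator) that compete against each other: the generator produces synthetic samples, and the discriminator tries to tell them apart from real data. Over training, this competition pushes the generator toward samples that resemble real data.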

Components of GANs:

Generator network

Discriminator network

Example applications:

Image generation

Style transfer

Data augmentation
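The competition between the two networks is usually expressed through their loss functions. The sketch below computes the standard binary cross-entropy losses for a discriminator and for a generator (in the commonly used non-saturating form), given some hypothetical discriminator scores. The scores and function names are assumptions for illustration; an actual GAN would produce these scores from neural networks and update both networks by gradient descent.

```python
import math

def binary_cross_entropy(prediction, target, eps=1e-12):
    """Cross-entropy for one predicted probability against a 0/1 target."""
    p = min(max(prediction, eps), 1.0 - eps)  # keep log() away from 0
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_term = sum(binary_cross_entropy(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(binary_cross_entropy(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its samples scored 1 (non-saturating form)."""
    return sum(binary_cross_entropy(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: the probability that each sample is real
d_real = [0.9, 0.8]  # scores on real data
d_fake = [0.2, 0.1]  # scores on generated data: the discriminator is winning

print(discriminator_loss(d_real, d_fake))  # small: it separates real from fake well
print(generator_loss(d_fake))              # large: the generator is not fooling it yet
```

As the generator improves, the scores on its samples rise, its loss falls, and the discriminator's loss grows, which is the adversarial dynamic described above.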

Transfer Learning - Leveraging pre-trained models on new, similar tasks to save time and resources.

Transfer learning is particularly useful when you have limited data for your specific task, since the pre-trained model has already learned general-purpose features.

Steps in transfer learning:

Select a pre-trained model

Freeze some or all layers of the pre-trained model

Add new layers for your specific task

Train the model on your data

Example applications:

Fine-tuning image classification models

Adapting language models to specific domains
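The steps above can be mimicked in a toy, standard-library sketch: a fixed feature extractor stands in for the frozen pre-trained layers, and only a small new output layer is trained. Everything here is an assumption for illustration: the "pre-trained" weights are made up, the dataset is tiny, and the helper names are hypothetical. With a real framework you would load an actual pre-trained model and mark its layers as non-trainable instead.

```python
import math

# Stand-in for a pre-trained model: weights that are never updated below.
# These values are made up; in practice they come from training on a large dataset.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def frozen_features(x):
    """Frozen layers: ReLU(W x) with W held constant."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, head_w, head_b):
    """New task-specific layer applied on top of the frozen features."""
    feats = frozen_features(x)
    return sigmoid(sum(w * f for w, f in zip(head_w, feats)) + head_b)

def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only the new output layer with stochastic gradient descent."""
    head_w, head_b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = frozen_features(x)
            error = predict(x, head_w, head_b) - y  # gradient of the loss w.r.t. the logit
            head_w = [w - lr * error * f for w, f in zip(head_w, feats)]
            head_b -= lr * error
    return head_w, head_b

# Small task-specific dataset (made up): label 1 when the first feature is larger
samples = [[2.0, 0.0], [1.5, 0.5], [0.0, 2.0], [0.5, 1.5]]
labels = [1, 1, 0, 0]

head_w, head_b = train_head(samples, labels)
predictions = [round(predict(x, head_w, head_b)) for x in samples]
print(predictions)
```

Because only the two head weights and one bias are trained, a handful of examples is enough here, which mirrors why transfer learning helps when task-specific data is scarce.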

Real-World Applications of Deep Learning with Python

The applications of deep learning are vast and continually expanding. Here are a few areas where deep learning with Python is making a significant impact:

Healthcare - Deep learning models are used for diagnosing diseases, analyzing medical images, and even predicting patient outcomes.

Finance - From fraud detection to algorithmic trading, deep learning helps in making more accurate financial predictions and decisions.

Automotive

The automotive industry, particularly in the development of self-driving cars, relies heavily on deep learning.

Natural Language Processing (NLP)

Deep learning models power applications like translation services, chatbots, and sentiment analysis, and have dramatically improved a wide range of NLP tasks.

Computer Vision

Deep learning has revolutionized computer vision tasks in facial recognition, object detection and tracking, image and video generation, and scene understanding.

Robotics

Deep learning is advancing the field of robotics in motion planning, object manipulation, and human-robot interaction.

Energy

The energy sector is leveraging deep learning for various applications.

Tools and Frameworks for Deep Learning in Python

Python’s ecosystem offers a variety of tools and frameworks that cater to different aspects of deep learning. Here are some of the most popular ones:

TensorFlow - Developed by Google Brain, TensorFlow is an open-source library that provides comprehensive tools for building and deploying deep learning models. It allows developers to build and train machine learning models using both CPUs and GPUs, making it highly scalable for both research and production environments. TensorFlow’s flexible architecture enables users to deploy models on various platforms, including cloud servers, mobile devices, and web applications.

Keras - Keras is a high-level neural networks API written in Python. Earlier multi-backend releases could run on top of TensorFlow, Theano, or CNTK; Keras 3 runs on top of TensorFlow, JAX, or PyTorch. It simplifies the process of building deep learning models, making it accessible to beginners while being powerful enough for experts. Keras supports both convolutional and recurrent networks and can run on both CPUs and GPUs.

PyTorch - PyTorch is a deep learning framework developed by Facebook’s AI Research lab (FAIR), known for its dynamic computation graph (define-by-run) that allows for flexibility in model design and debugging. PyTorch has strong GPU acceleration, an intuitive interface, and native support for Python, which makes it a favorite in academic research and production alike.

Theano - Theano, developed by the MILA lab at the University of Montreal, was one of the pioneering deep learning frameworks and influenced many modern libraries, such as TensorFlow and PyTorch. While it is no longer actively developed, Theano remains a capable tool for defining, optimizing, and evaluating mathematical expressions involving multi-dimensional arrays (tensors).

Scikit-learn - Scikit-learn is a versatile machine learning library built on top of NumPy, SciPy, and matplotlib. While not a deep learning library per se, Scikit-learn provides many tools for data preprocessing, model selection, and evaluation that are crucial for deep learning projects. Scikit-learn includes implementations of classical machine learning algorithms such as regression, clustering, and classification, making it an indispensable library for any data scientist working on a deep learning project.

Fastai - Built on top of PyTorch, Fastai aims to make deep learning more accessible. It provides high-level abstractions, pre-configured architectures, and state-of-the-art techniques that simplify model training, allowing users to achieve strong performance with relatively little code. Fastai is highly modular, supporting a wide range of tasks such as image classification, NLP, and tabular data.

Caffe (Convolutional Architecture for Fast Feature Embedding) - Developed by the Berkeley Vision and Learning Center (BVLC), Caffe is a deep learning framework with an emphasis on convolutional neural networks (CNNs) for image classification, segmentation, and other vision tasks. It is known for its speed and modularity, making it popular in both research and production settings. Written in C++ with a Python interface, Caffe supports GPU acceleration and is optimized for fast performance.

Chainer - Chainer is a deep learning framework developed by Preferred Networks. It is known for its "define-by-run" approach, which enables dynamic neural networks, meaning the network's computation graph is constructed on-the-fly. This makes it more intuitive and flexible than static graph frameworks. Chainer is widely used in research and supports CUDA for GPU-based computation.

Deeplearning4j (DL4J) - DL4J is a Java-based deep learning library designed to work within the Java ecosystem, making it highly compatible with big data technologies like Apache Hadoop and Spark. DL4J is built to run on distributed hardware and is often used for production-level applications where scalability is crucial. It also supports a variety of neural networks, including CNNs and recurrent neural networks (RNNs).

Microsoft Cognitive Toolkit (CNTK) - CNTK is a deep learning framework that allows users to easily build and train neural networks. It emphasizes efficiency and scalability, particularly in distributed environments, and supports a range of tasks such as speech, image, and text processing. CNTK has been used in a variety of Microsoft products and services. It supports multiple programming languages, including Python and C++.

Open Neural Network Exchange (ONNX) - ONNX is an open-source format for representing machine learning models, initially created by Facebook and Microsoft as an open standard. It enables models trained in one framework to be transferred to another, fostering interoperability between frameworks like PyTorch, TensorFlow, and Caffe2.

Apache MXNet - MXNet is a scalable deep learning framework supported by Apache, known for its efficient and flexible architecture. It allows for both symbolic and imperative programming, making it easy for researchers to experiment while providing the performance needed for production applications. MXNet supports a range of neural networks and has strong GPU acceleration capabilities. It's also the framework of choice for Amazon Web Services (AWS) and integrates well with other AWS tools.

JAX - Developed by Google, JAX is a library for accelerated, high-performance numerical computing. It allows users to perform automatic differentiation of Python and NumPy code and can be used for machine learning, specifically for building and training neural networks. JAX is known for its composability and efficiency in handling machine learning models and other mathematical computations, taking advantage of hardware acceleration (e.g., GPUs and TPUs).

PaddlePaddle (PArallel Distributed Deep LEarning) - PaddlePaddle is a deep learning platform originally developed by Baidu. It is particularly focused on ease of use, flexibility, and efficiency for industrial applications. PaddlePaddle supports a wide range of neural networks and is designed to run on distributed systems, making it highly scalable. It is popular in China and has been used in applications ranging from image recognition to natural language processing (NLP).

Sonnet - Sonnet, by DeepMind, is a high-level deep learning library built on top of TensorFlow. Sonnet is designed to be simple and highly modular, allowing users to define and connect neural network components easily. While it provides a more abstract interface for building models, it still retains the power of TensorFlow's computation graph. It's often used internally at DeepMind for research in areas like reinforcement learning.

Navigating the Turns Ahead in Deep Learning

While deep learning has made remarkable progress, several challenges and exciting future directions remain:

Data Efficiency

Deep learning models typically require large amounts of labeled data to perform well. Improving data efficiency is a key area of research.

Future directions:

Robustness and Generalization

Ensuring that deep learning models perform well on unseen data and are robust to adversarial attacks is an ongoing challenge.

Future directions:

Energy Efficiency

Training and deploying large deep learning models can be computationally intensive and energy-consuming.

Future directions:

The Synergy of Deep Learning and Python

The synergy between deep learning and Python is a testament to the power of combining advanced computational techniques with accessible programming tools. Python's extensive libraries and frameworks, such as TensorFlow, Keras, and PyTorch, provide the building blocks for developing sophisticated neural networks. These tools, coupled with Python's ease of use, allow for rapid prototyping, experimentation, and deployment of deep learning models.

Conclusion

The road ahead for deep learning with Python is both exciting and challenging. As we continue to explore and harness the potential of these technologies, collaboration between researchers, developers, and industry professionals will be crucial. By fostering an environment of shared knowledge and ethical responsibility, we can ensure that deep learning not only advances technological capabilities but also contributes positively to society.

Deep learning with Python is more than just a powerful combination of technology and programming; it is a catalyst for innovation and a driver of change. As we stand on the brink of new discoveries and applications, the possibilities are endless, and the journey has only just begun. Embracing this journey with curiosity, responsibility, and a commitment to ethical practices will pave the way for a future where deep learning enhances our world in meaningful and impactful ways.

Whether you're just starting or looking to advance your skills, the rich ecosystem of Python libraries and frameworks provides the tools you need to dive into deep learning. By exploring this field, you'll be at the forefront of technological advancements, ready to tackle some of the world's most challenging and intriguing problems.

Find more about AI and ML here: ML AI News.

Machine Learning Artificial Intelligence News

https://machinelearningartificialintelligence.com
